Nvidia and Google’s Competing Paths in AI Compute

The phrase "monopoly in a global capitalist market" is a contradiction in terms. Nonetheless, in AI hardware, Nvidia GPUs are the standard against which all other chips are measured.

Historically, near-monopolies operating in free markets do not keep their status indefinitely: market forces tend to erode a leader's advantage as competitors catch up. Cisco Systems is a dramatic example. A hardware industry leader in the early 2000s, Cisco saw its stock price plunge once other companies began producing similar products.

Nvidia's financials appeared healthy as of November 2025: the company reported 36% year-over-year growth and record revenue of $57.01 billion against analyst expectations of $54.2 billion, EPS of $1.30 versus the expected $1.25, and data center revenue up 112% year over year.

Like Cisco Systems before it, NVDA stock faces a major threat from competitors that are already producing chips offering greater performance and cost efficiency than Nvidia's GPUs for some workloads. In particular, hyperscalers (companies that operate massive cloud and data center infrastructure, such as Google, Meta, and Amazon) have been developing custom chips for their server farms for some time: Google has developed TPUs (Tensor Processing Units), Meta its MTIA (Meta Training and Inference Accelerator) chips, and Amazon its Trainium processors.

One of the most interesting aspects of this situation is that many of Nvidia's largest competitors are also its largest customers, which raises a major concern for NVDA stock: extremely high customer concentration. The majority of Nvidia's chip sales go to just five customers (Microsoft, Meta, Amazon, Google, and Oracle), several of which have recently invested billions in technologies designed to compete with Nvidia on equal or better terms. Should any of them significantly cut its purchases, Nvidia would feel the effects almost immediately.

Recent share-price moves support this concern. After Nvidia announced its record results, the stock rose approximately 5% after hours but fell approximately 3.2% the following day, amid the release of Google's latest LLM, Gemini 3. As seen on the heatmap, competition is heating up: Gemini 3 currently appears to be one of the most powerful LLMs, with a considerable lead over competing models. Just as important, Gemini 3 was trained entirely on Google's proprietary TPUs rather than on Nvidia GPUs.

Google's TPUs, which were designed specifically for machine-learning workloads, are less expensive and more energy-efficient than Nvidia GPUs, which were originally designed for gaming and only later became the AI industry standard. Recent comments by Nvidia CEO Jensen Huang about the "serious difficulty" companies would face if they switched to a platform other than Nvidia show how determined he is to preserve the company's dominant position, and suggest some anxiety about its ability to retain it.

Compared with Nvidia's GPUs, Google's TPUs have lower inference costs (according to Google's internal report, at least four times lower) and use 67% less energy. These advantages are opening a significant crack in Nvidia's dominance and had already prompted deal discussions by late November.
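To make the two headline ratios concrete, the sketch below applies them to a purely hypothetical GPU baseline, reading "four times lower" as "at most one quarter of". The baseline cost and energy figures and the `tpu_equivalent` helper are made-up placeholders, not data from Google or Nvidia; only the two ratios come from the claims above.

```python
# Hypothetical GPU baseline for some inference workload (placeholder values, not real data).
GPU_COST_USD = 1_000_000.0    # assumed monthly inference bill on GPUs
GPU_ENERGY_MWH = 900.0        # assumed monthly energy use on GPUs

# Ratios taken from the claims in the text.
COST_REDUCTION_FACTOR = 4.0   # "at least four times lower inference cost"
ENERGY_SAVING = 0.67          # "67% less energy"


def tpu_equivalent(gpu_cost: float, gpu_energy: float) -> tuple[float, float]:
    """Return (upper bound on TPU cost, TPU energy) for the same hypothetical workload."""
    tpu_cost = gpu_cost / COST_REDUCTION_FACTOR      # at most a quarter of the GPU cost
    tpu_energy = gpu_energy * (1.0 - ENERGY_SAVING)  # roughly 33% of the GPU energy
    return tpu_cost, tpu_energy


cost, energy = tpu_equivalent(GPU_COST_USD, GPU_ENERGY_MWH)
print(f"TPU cost <= ${cost:,.0f}, energy ~ {energy:,.0f} MWh")
```

Because the cost claim is a lower bound ("at least"), the computed TPU cost is best read as a ceiling, while the energy figure is a point estimate.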

Anthropic has secured an agreement for up to one million TPUs, a deal estimated at $42 billion. Meta is also reportedly planning to rent TPUs through Google Cloud in 2026 and to build on-premises TPU pods in its own data centers by 2027, even though it remains one of Nvidia's largest clients (with planned capital expenditures of $70-72 billion in 2025).
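As a rough sanity check on the Anthropic figure, dividing the estimated $42 billion by one million chips gives a naive average value per TPU. This is only an illustrative division under a stated assumption: the total may well include cloud capacity, networking, and services rather than chips alone, so the result should not be read as a unit price.

```python
# Naive implied average value per TPU in the reported Anthropic deal.
# Assumption: the whole estimated value is spread evenly across the chips,
# which almost certainly oversimplifies the actual contract.
DEAL_VALUE_USD = 42e9    # estimated deal value from the text
TPU_COUNT = 1_000_000    # number of TPUs from the text

implied_per_tpu = DEAL_VALUE_USD / TPU_COUNT
print(f"Implied average value per TPU: ${implied_per_tpu:,.0f}")
```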

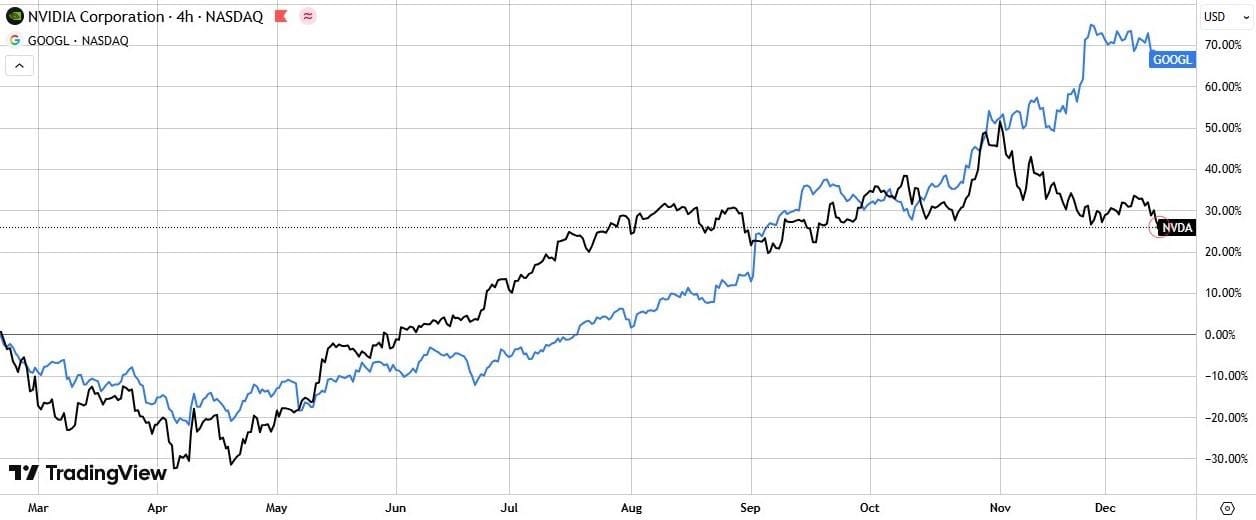

Comparing the share prices of Nvidia and Google over the past several months shows that the two tracked each other fairly closely after the April lows, but the gap between them began to widen in late November.
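Comparisons like this are usually made by rebasing each stock's price to a common starting value, so that the lines show relative returns rather than raw prices. A minimal sketch, assuming daily closing prices aligned on the same dates; the helper names `rebase` and `spread` and the toy prices are illustrative only, not actual NVDA or GOOGL data.

```python
def rebase(prices: list[float], base: float = 100.0) -> list[float]:
    """Scale a price series so that its first value equals `base`."""
    if not prices or prices[0] == 0:
        raise ValueError("need a non-empty series with a non-zero first price")
    first = prices[0]
    return [base * p / first for p in prices]


def spread(a: list[float], b: list[float]) -> list[float]:
    """Point-by-point gap between two rebased series (a minus b)."""
    if len(a) != len(b):
        raise ValueError("series must be aligned on the same dates")
    return [x - y for x, y in zip(a, b)]


# Toy closing prices from a common start date (made-up numbers).
stock_a = [50.0, 55.0, 60.0, 57.0]
stock_b = [200.0, 220.0, 240.0, 260.0]

rebased_a = rebase(stock_a)
rebased_b = rebase(stock_b)
print(spread(rebased_a, rebased_b))
```

In this toy series the spread stays at zero while the two stocks move in step, then opens up in the last period, which is the kind of divergence the chart shows from late November.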

Hardware is only part of the equation, and Nvidia has an overwhelming advantage in software. CUDA, cuDNN, and TensorRT are the de facto standard platforms for developing and deploying AI at scale. When switching from CUDA to XLA (the compiler used for TPUs), developers must rewrite or retune their code, work through new performance bottlenecks, and accept reduced portability across cloud providers. Nvidia hardware is available on AWS, Azure, and Google Cloud and is supported by frameworks such as PyTorch, MXNet, and Keras. TPUs, by contrast, are currently available only through Google Cloud, so Google controls the infrastructure and services used to build and deploy TPU-based applications, which limits developer flexibility. If Google decided to raise its prices, developers using TPUs would have to either absorb the increase or rewrite their applications to run on other hardware.

For now, this is why many companies hold off on investing heavily in TPUs, even though TPUs are cheaper to run than the alternatives.
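The trade-off above can be framed as a simple break-even calculation: a one-time migration cost (rewriting and retuning code for XLA) is recovered only through ongoing savings from cheaper TPU capacity. A minimal sketch; the `breakeven_months` helper and every input figure are hypothetical placeholders, and the model deliberately ignores lock-in risks such as a future price increase.

```python
import math


def breakeven_months(migration_cost: float, gpu_monthly: float, tpu_monthly: float) -> float:
    """Months of TPU savings needed to repay a one-time migration cost.

    Returns math.inf when the TPU option is not cheaper per month,
    since the migration would then never pay for itself.
    """
    monthly_saving = gpu_monthly - tpu_monthly
    if monthly_saving <= 0:
        return math.inf
    return migration_cost / monthly_saving


# Hypothetical inputs: $6M to port and retune the codebase,
# $1M/month on GPUs versus $0.5M/month on TPUs.
months = breakeven_months(6_000_000, 1_000_000, 500_000)
print(f"Break-even after {months:.1f} months")
```

The longer the payback period, and the more uncertain future TPU pricing is, the harder the switch is to justify, even when the per-month savings are real.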

Conclusions

In 2025, Nvidia and Google have diverged noticeably in the AI market as its dynamics shift. Nvidia has historically dominated model training and offered unmatched software flexibility, but it is now losing market share in AI accelerators to Google's TPUs, which are proving both technically and economically superior at scale for many workloads.

The AI market is moving from a primarily GPU-based ecosystem to a heterogeneous one. Because the landscape keeps evolving, analysts and investors must reassess it continually. Although Nvidia remains a significant player in artificial intelligence, the era of its unchecked dominance is coming to an end.

The central question for the future of the AI market is no longer “GPU or TPU?” Instead, companies seeking to use AI in their businesses should evaluate which combination of GPUs and TPUs offers the best balance of performance, cost, and flexibility for their particular workloads. In this emerging environment, both GPU and TPU architectures will be vital, but market share, and with it stock prices and bargaining power, will increasingly depend on who captures value in this rapidly expanding market.